QEMU virtualization

Updated 24 January 2020

Kernel-based Virtual Machine (KVM) is a software-based virtualization solution that supports hardware virtualization based on Intel VT (Virtualization Technology) or AMD SVM (Secure Virtual Machine).

QEMU is a free, open source emulator that runs on many platforms. It can work without KVM, but hardware virtualization significantly accelerates guest systems, so running QEMU with KVM (the -enable-kvm option) is the preferred setup.

Initially, KVM support for QEMU was developed within the Linux KVM project. Besides KVM itself (kernel-level support for the hardware virtualization features of x86-compatible processors), that project maintained patches that allowed QEMU to use KVM functionality.

However, the QEMU developers, in cooperation with their KVM counterparts, subsequently decided to integrate KVM support into mainline QEMU.

With KVM, you can run multiple virtual machines from unmodified Linux or Windows images. Each virtual machine operates in its own private environment with its own virtual hardware: a network card, a drive, a graphics adapter, etc.

The Linux kernel has supported KVM since version 2.6.20.
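Before relying on KVM, it can help to confirm that the host CPU advertises one of the extensions mentioned above. The following is a minimal sketch rather than part of the original guide: the helper name cpu_virt_flag is hypothetical, and it assumes a Linux host that lists CPU flags in /proc/cpuinfo and a grep that supports -m and -o (GNU or BSD grep).

```shell
# Print the hardware virtualization flag found in a cpuinfo-style file:
# "vmx" for Intel VT, "svm" for AMD SVM.
# Returns a non-zero status if neither flag is present.
cpu_virt_flag() {
    cpuinfo="${1:-/proc/cpuinfo}"
    # -m 1: stop after the first matching line; -o: print only the match;
    # -w: match whole words, so longer flag names are not picked up.
    grep -m 1 -o -w -E 'vmx|svm' "$cpuinfo"
}
```

Called without arguments, cpu_virt_flag reads /proc/cpuinfo. A non-zero exit status means neither flag was found, which usually indicates that the CPU lacks the feature or that it is disabled in the firmware setup. Once the KVM kernel modules are loaded, the /dev/kvm device node should also be present.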

libvirt is an interface and a daemon for managing virtual machines on various hypervisors (QEMU/KVM, Xen, VirtualBox). It lets you configure and control virtual machines easily, and a variety of third-party management tools, web interfaces, etc. are built on top of it. For example, we recommend virt-manager, a graphical interface for managing virtual machines.

Installing the necessary packages

By default, QEMU is built with support for the i386 and x86_64 architectures. To support more targets, edit the build flags. For example, if you need arm support:

QEMU_SOFTMMU_TARGETS="arm i386 x86_64"

To install QEMU and libvirt, run:

emerge -a app-emulation/qemu app-emulation/libvirt

QEMU setup

Edit the libvirt configuration file for QEMU (normally /etc/libvirt/qemu.conf):

# Allow VNC to listen on all interfaces
vnc_listen = "0.0.0.0"

# Disable TLS (unless the virtual machines are used only for testing,
# enabling and setting up TLS is preferable)
vnc_tls = 0

# Default VNC password, used if the virtual machine has no password of its own
vnc_password = "XYZ12345"

# User that will start QEMU
user = "root"

# Group that will start QEMU
group = "root"

# Save and dump format. Using gzip or other compression reduces the space
# required for images, but increases the time needed to save them.
save_image_format = "gzip"
dump_image_format = "gzip"

Start the libvirt daemon and add it to autostart:

/etc/init.d/libvirtd start

rc-update add libvirtd

You will also need the tun and vhost_net modules for the virtual network. Load the necessary modules:

modprobe -a tun vhost_net

...and add them to autostart:

tun vhost_net
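To confirm that both modules are actually loaded, the kernel's module list can be checked. This is a minimal sketch, assuming a Linux host; module_loaded is a hypothetical helper name. Modules compiled directly into the kernel do not appear in /proc/modules, so a miss here is not conclusive on its own.

```shell
# Succeed if the named module is listed in /proc/modules
# (or in the file given as the second argument).
module_loaded() {
    # Each line of /proc/modules starts with the module name followed by a space.
    grep -q "^$1 " "${2:-/proc/modules}"
}
```

For example, `for m in tun vhost_net; do module_loaded "$m" || echo "$m is not loaded"; done` reports any module from the step above that is missing.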

Creating a virtual machine

First of all, create a disk image for the virtual machine. By default, the image grows dynamically as the guest writes data:

qemu-img create -f qcow2 /var/calculate/vbox/hdd.qcow2 20G

If you preallocate the image, the virtual machine will work faster, because space will not have to be allocated while the guest is running. To create an image with preallocated metadata, run:

qemu-img create -f qcow2 -o preallocation=metadata hdd.qcow2 20G
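The difference between a dynamically growing image and a preallocated one becomes visible when the virtual size is compared with the space actually used on the host. The sketch below relies on qemu-img info; image_sizes is a hypothetical helper name, and the QEMU_IMG variable is an assumption introduced only so that the binary can be overridden.

```shell
# Print the virtual size (what the guest sees) and the disk size
# (space currently used on the host) of an image.
image_sizes() {
    "${QEMU_IMG:-qemu-img}" info "$1" | grep -E '^(virtual size|disk size):'
}
```

Running `image_sizes /var/calculate/vbox/hdd.qcow2` right after creation should show a virtual size of 20 GiB and a much smaller disk size for the dynamically growing image.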

Here is a configuration example:

<domain type='kvm' id='1'>
  <features><acpi/></features>
  <name>testkvm</name>
  <description>Description of server</description>
  <memory unit='KiB'>1048576</memory>
  <vcpu placement='static'>1</vcpu>
  <os>
    <type arch='x86_64' machine='pc-1.3'>hvm</type>
    <boot dev='cdrom' />
    <boot dev='hd' />
    <bootmenu enable='yes'/>
  </os>
  <devices>
    <emulator>/usr/bin/qemu-system-x86_64</emulator>
    <disk type='file' device='disk'>
      <driver name='qemu' type='qcow2' cache='writeback' io='threads'/>
      <source file='/var/calculate/vbox/hdd.qcow2'/>
      <target dev='vda' bus='virtio'/>
    </disk>
    <disk type='file' device='cdrom'>
      <driver name='qemu' type='raw'/>
      <source file='/var/calculate/linux/css-18.12-x86_64.iso'/>
      <target dev='vdd' bus='virtio'/>
      <readonly/>
    </disk>
    <interface type='network'>
      <source network='default'/>
    </interface>
    <graphics type='vnc' port='5910' autoport='no'>
      <listen type='address'/>
    </graphics>
  </devices>
</domain>

The following configuration is used in the example above:

- 1 GiB of RAM

- 1 CPU core

- 64-bit system

- Calculate Scratch Server 18.12 to be installed

- a VNC server on port 5910
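The memory element in the example is given in KiB, which is why 1 GiB of RAM is written as 1048576. A small sketch of the conversion using only shell arithmetic; gib_to_kib is a hypothetical helper name.

```shell
# Convert a whole number of GiB to KiB for use in <memory unit='KiB'>.
gib_to_kib() {
    # 1 GiB = 1024 MiB = 1024 * 1024 KiB
    echo $(( $1 * 1024 * 1024 ))
}

gib_to_kib 1   # prints 1048576
```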

For more information about the virtual machine configuration file, see the [libvirt domain XML format documentation](http://libvirt.org/formatdomain.html).

Define the machine from the configuration file:

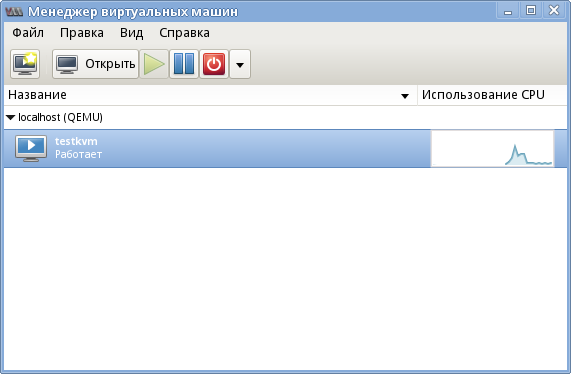

virsh define testkvm.xml

To launch the machine, run:

virsh start testkvm

To stop the machine, run:

virsh destroy testkvm

This is an emergency stop, comparable to turning off the power.

ACPI support must be installed on the guest machine to support normal shutdown and restart. If you are using Calculate Linux as a guest machine, you can do this as follows:

emerge -a sys-power/acpid

rc-update add acpid boot
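With ACPI support in the guest, a normal shutdown can be requested with virsh shutdown instead of virsh destroy. The sketch below asks the guest to shut down, polls virsh domstate, and falls back to the emergency stop only if the guest is still running after a timeout. The helper name graceful_stop and the 60-second default are assumptions made for illustration, and the state string "shut off" is what virsh prints in the C locale.

```shell
# Request an ACPI shutdown; force the guest off if it is still
# running after the timeout (in seconds).
graceful_stop() {
    domain="$1"
    timeout="${2:-60}"   # arbitrary default, adjust to the guest
    virsh shutdown "$domain" || return 1
    waited=0
    while [ "$waited" -lt "$timeout" ]; do
        # LC_ALL=C keeps the state string untranslated
        state=$(LC_ALL=C virsh domstate "$domain")
        if [ "$state" = "shut off" ]; then
            return 0
        fi
        sleep 1
        waited=$((waited + 1))
    done
    echo "$domain did not shut down within ${timeout}s, forcing power off" >&2
    virsh destroy "$domain"
}
```

For the machine defined above this would be called as `graceful_stop testkvm 120`.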

To enable autostart of the guest machine at the start of the libvirt daemon, type:

virsh autostart testkvm

To disable the autostart mode, run:

virsh autostart --disable testkvm

To run a virtual machine without libvirt, run:

qemu-system-x86_64 -name calculate -cpu host -smp 4 -enable-kvm \
    -localtime -m 2048 -no-fd-bootchk \
    -net nic,model=virtio,vlan=0 -net user,vlan=0 \
    -drive file=hdd.img,index=0,media=disk,if=virtio \
    -drive file=/mnt/iso/cldx-20150224-i686.iso,media=cdrom \
    -monitor telnet:0.0.0.0:4008,server,nowait \
    -spice port=5901,disable-ticketing -vga qxl -usb

Working with Virtio devices

With ordinary emulated drives and network cards, the guest system is presented with copies of real devices. The device drivers in the guest convert high-level requests into low-level ones; the virtualization layer intercepts them, converts them back into high-level requests and passes them on to the host system drivers. With virtio devices, this chain is shortened: virtio drivers do no conversion and pass high-level requests directly to the host system, which makes the virtual machine faster.
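Inside a Linux guest, one way to confirm that virtio devices are actually in use is to look at the virtio bus in sysfs. This is a minimal sketch, assuming the guest kernel exposes /sys/bus/virtio/devices; list_virtio_devices is a hypothetical helper name.

```shell
# List virtio devices and the driver bound to each one.
list_virtio_devices() {
    sysdir="${1:-/sys/bus/virtio/devices}"
    for dev in "$sysdir"/*; do
        # Skip the unexpanded pattern when the directory is empty or missing
        [ -e "$dev" ] || continue
        if [ -L "$dev/driver" ]; then
            # The "driver" symlink points at the bound driver's directory
            driver=$(basename "$(readlink "$dev/driver")")
        else
            driver="(no driver bound)"
        fi
        printf '%s %s\n' "$(basename "$dev")" "$driver"
    done
}
```

Each output line pairs a device name with its driver. No output suggests that the guest is using emulated devices rather than virtio ones.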

Drive switching commands

General syntax (run inside the virsh shell, or prefix the command with virsh):

change-media <domain> <path> [<source>] [--eject] [--insert] [--update] [--current] [--live] [--config] [--force]

To insert the disc.iso image into the virtual drive, run:

change-media guest01 vdd disc.iso

To remove the image from the virtual drive, run:

change-media guest01 vdd --eject

Network configuration

By default, guests are connected to a virtual network that is not accessible from the outside. Guests can be reached by IP only from the computer running QEMU, while the guests themselves access outside networks via NAT.

Here are the possible network configurations:

- NAT Based is the default option. An internal network provides access to an external network with NAT applied automatically. The above configuration example uses this option.

- Routed is similar to NAT Based: an internal network that provides access to the external network, but without NAT. It requires additional routing table configuration in the external network.

- An isolated IPv4/IPv6 network.

- A bridge connection. Allows you to implement a variety of configurations, including IP assignment in a real network.

- PCI passthrough of a network card from the host machine to the guest machine.
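The domain example earlier in this guide attaches the guest to the libvirt network named default, which has to be active before the guest starts. The sketch below checks the network with virsh net-info and starts it with virsh net-start if needed. The helper name ensure_network_active is hypothetical, and the "Active:" field is matched as printed in the C locale.

```shell
# Start a libvirt network unless it is already active.
ensure_network_active() {
    net="${1:-default}"
    # virsh net-info prints a line such as "Active:         yes"
    if LC_ALL=C virsh net-info "$net" | grep -E '^Active:[[:space:]]+yes' >/dev/null; then
        return 0
    fi
    virsh net-start "$net"
}
```

To have the network started together with the libvirt daemon, `virsh net-autostart default` can be used as well.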

For more information on network configuration, please check the following links: